How accurate do you think your Google Analytics data is? Setting up Google Analytics bot filtering is often the first step toward critical data cleanup. You’ll be amazed at how much traffic disappears when you apply this filter, but remember that quality matters far more than quantity. You don’t want to waste your time analyzing bad data!

PS: If you’re just looking for the basic filter configuration, skip ahead to “How to configure the basic filter.”

Why is clean data important to begin with?

Assuming that you’re making decisions based on your Google Analytics data, you want it to be as accurate and as relevant as possible. If you’re going to add $500 per month to your paid ad budget, you want to know for certain that you’re getting the return on investment you expect. Unfortunately, a lot of the information in your Google Analytics account is likely unreliable. There’s just a lot of noise in traffic numbers!

How do you find bad data?

Identifying the characteristics of bad or simply irrelevant data is the critical step in cleaning up your numbers. It’s important to establish a few things out of the gate:

- What is the target audience for your site?
  - Ex: If I own a bicycle shop in Seattle, WA, I’m most interested in traffic from the Pacific Northwest.

- What are your most common methods of traffic acquisition?
  - Ex: If I regularly post in a marketing question-and-answer forum, I can expect some referral traffic from that site.

- What do you know about your users’ preferences?
  - Ex: If most of the traffic to and from my website comes through my mobile application, I can expect a large proportion of traffic on mobile devices.

Establishing these items up front helps you create a customized, high-quality traffic filter for your website.

Basic characteristics for common Google Analytics bot filtering

To get into the nitty-gritty of what makes up a bad data profile in Google Analytics, here are a few key flags that any cleanup filter should include:

- Language =/= (not set)

- Screen Resolution =/= (not set)

- City =/= (not set)

- Browser size =/= (not set)

If any of these four values is (not set) in GA, that’s a strong sign the session didn’t come from a real user device: it’s likely a bot or another form of automated traffic.

If you’re curious about the technical details: Language is set using ISO 639 codes; Screen Resolution reports the width by height of the device’s screen (not to be confused with Browser Size, which is the size of the viewport window); and City is derived from the visitor’s IP address and the area associated with it by the Internet Service Provider (ISP).
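To make the logic concrete, here is a minimal Python sketch of these four checks. It assumes each session is available as a plain dict of dimension values (for example, rows exported from a custom report); the field names and the `has_unset_core_dimension` helper are hypothetical and not part of any Google Analytics API. A missing field is treated the same as (not set).

```python
# Hypothetical field names for exported GA dimension values.
CORE_DIMENSIONS = ("language", "screen_resolution", "city", "browser_size")
NOT_SET = "(not set)"


def has_unset_core_dimension(session: dict[str, str]) -> bool:
    """Return True if any core dimension is missing or reported as (not set)."""
    return any(session.get(dim, NOT_SET) == NOT_SET for dim in CORE_DIMENSIONS)


if __name__ == "__main__":
    real_user = {
        "language": "en-us",
        "screen_resolution": "1920x1080",
        "city": "Seattle",
        "browser_size": "1280x720",
    }
    likely_bot = dict(real_user, city=NOT_SET)  # same device, but no city

    print(has_unset_core_dimension(real_user))   # False
    print(has_unset_core_dimension(likely_bot))  # True
```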

There are a few more flags that you can add in:

- Operating System =/= Linux (sorry, Linux!)

- Screen Resolution =/= 800x600

- Browser size =/= 800x600

- NOT (Browser is one of Firefox, Chrome AND Browser Version matches regex ^[0-5])

These additional flags exclude Linux users (apologies, Linux, but a very high rate of fraudulent traffic reports itself as Linux), users with a suspiciously old-school screen resolution or browser size (800x600), and users of very old Firefox and Chrome versions. All of these unusual patterns are common markers of fraudulent traffic.

As with any high-level approach, this method isn’t foolproof, and you may end up stripping out a small percentage of valid data. (For example, if you write a blog about Linux, you probably don’t want to exclude Linux users from your reporting!) That’s why it’s important to run the validity check described in the section below.
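Continuing the same hypothetical dict-based sketch, the additional flags might look like this in Python. Two assumptions to note: the field names are illustrative, and resolutions are compared using a lowercase ASCII “x” (`800x600`), which is the format GA uses for these dimensions (worth confirming against your own reports); a typographic “×” would never match. The version test applies the `^[0-5]` pattern as a regex match.

```python
import re

SUSPICIOUS_SIZE = "800x600"          # lowercase ASCII "x", as GA reports sizes
OLD_VERSION = re.compile(r"^[0-5]")  # the article's pattern, used as a "matches" test


def trips_additional_flags(session: dict[str, str]) -> bool:
    """Return True if the session matches any of the additional bot flags."""
    if session.get("operating_system") == "Linux":
        return True
    if SUSPICIOUS_SIZE in (session.get("screen_resolution"), session.get("browser_size")):
        return True
    # Old Chrome/Firefox: both conditions must hold (the "AND" in the flag).
    return (
        session.get("browser") in ("Chrome", "Firefox")
        and OLD_VERSION.match(session.get("browser_version", "")) is not None
    )


if __name__ == "__main__":
    print(trips_additional_flags({"operating_system": "Linux"}))                     # True
    print(trips_additional_flags({"browser_size": "800x600"}))                       # True
    print(trips_additional_flags({"browser": "Firefox", "browser_version": "3.6"}))  # True
    print(trips_additional_flags({"browser": "Chrome", "browser_version": "80.0"}))  # False
```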

Refining your filter

Now that we’ve established a baseline filter definition, it’s worth layering in a few site-specific criteria so the filter works best for your individual site. Your answers from the “How do you find bad data?” section will help here.

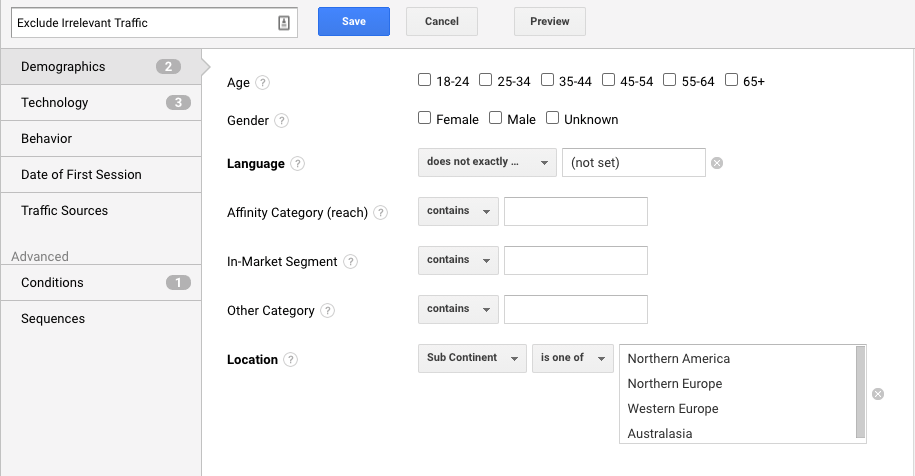

For example, the owner of this site is focused on North American, European, and Australian users, so they created a filter where Location > Sub Continent is one of “Northern America, Northern Europe, Western Europe, Australasia”.

Going back to our bike shop example, you could create an even more specific location filter, such as Location > Region is one of “Washington, Oregon, British Columbia”.

This may not seem like a significant addition, but it can help remove the final dregs of less relevant or even fraudulent data in your Google Analytics reporting.
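A relevancy filter is essentially an allowlist check. This Python sketch uses the two example lists from above; the field names (`sub_continent`, `region`) and the `is_relevant` helper are hypothetical, and you would swap in the locations that match your own audience.

```python
# Example allowlists from the text; replace with your own audience's locations.
ALLOWED_SUB_CONTINENTS = {"Northern America", "Northern Europe", "Western Europe", "Australasia"}
ALLOWED_REGIONS = {"Washington", "Oregon", "British Columbia"}


def is_relevant(session: dict[str, str], field: str, allowed: set[str]) -> bool:
    """Mirror an "is one of" location condition: keep only allowlisted values."""
    return session.get(field) in allowed


if __name__ == "__main__":
    visitor = {"sub_continent": "Northern America", "region": "Oregon"}
    print(is_relevant(visitor, "sub_continent", ALLOWED_SUB_CONTINENTS))  # True
    print(is_relevant(visitor, "region", ALLOWED_REGIONS))                # True
    print(is_relevant({"region": "Texas"}, "region", ALLOWED_REGIONS))    # False
```

Note that a missing or (not set) location fails the check too, so a location filter also catches some of the same traffic as the basic (not set) flags.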

Creating your filter in Google Analytics

Now that we have our full set of definitions, we can create the Google Analytics bot filtering configuration in the tool itself. This is where the rubber meets the road, so to speak. If you skipped ahead to this section, read on for the exact steps to apply the standard filter, followed by the custom elements.

How to configure the basic filter

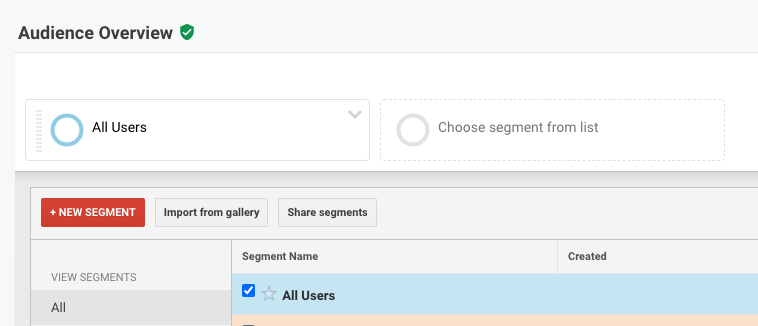

In Google Analytics, navigate to Audience > Overview, click “Add Segment”, and then click “+ New Segment”.

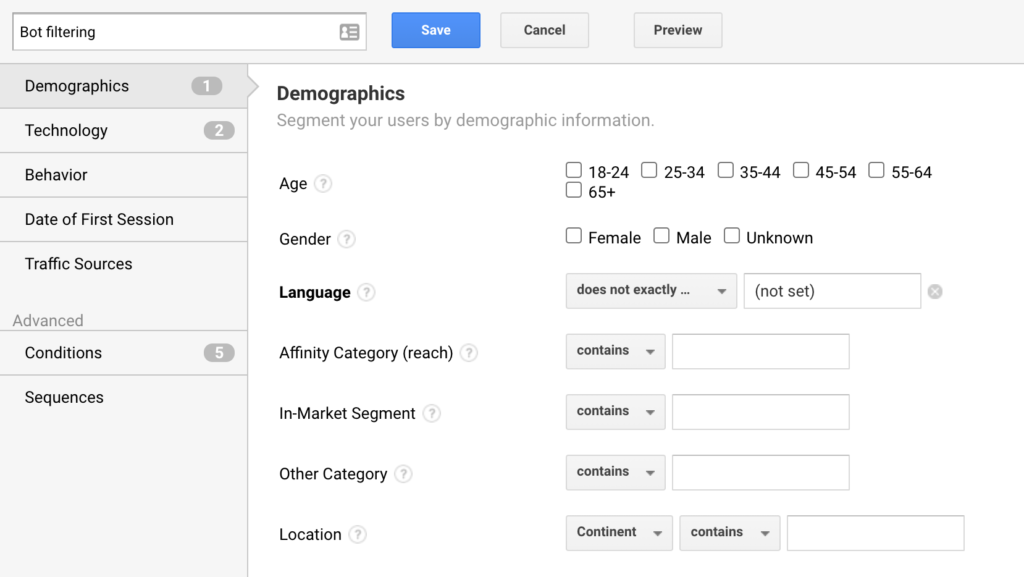

Under the Demographics tab, for Language select “does not exactly match” and enter the value (not set).

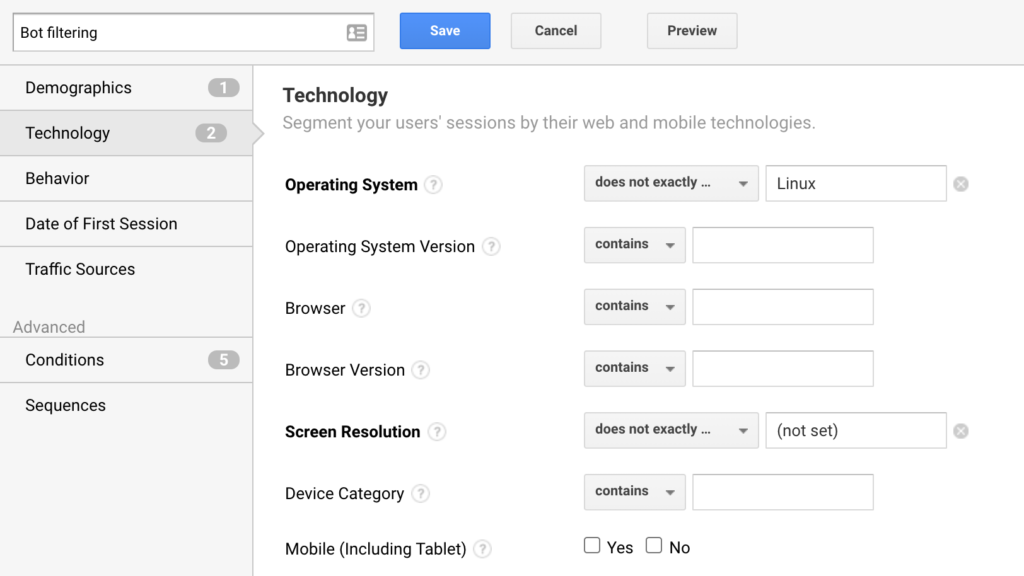

Next, select the Technology tab. Under Operating System, select “does not exactly match” and enter the value Linux.

Under Screen Resolution, select “does not exactly match” and enter “(not set)”.

Finally, we’ll add the majority of the filters under the Conditions tab.

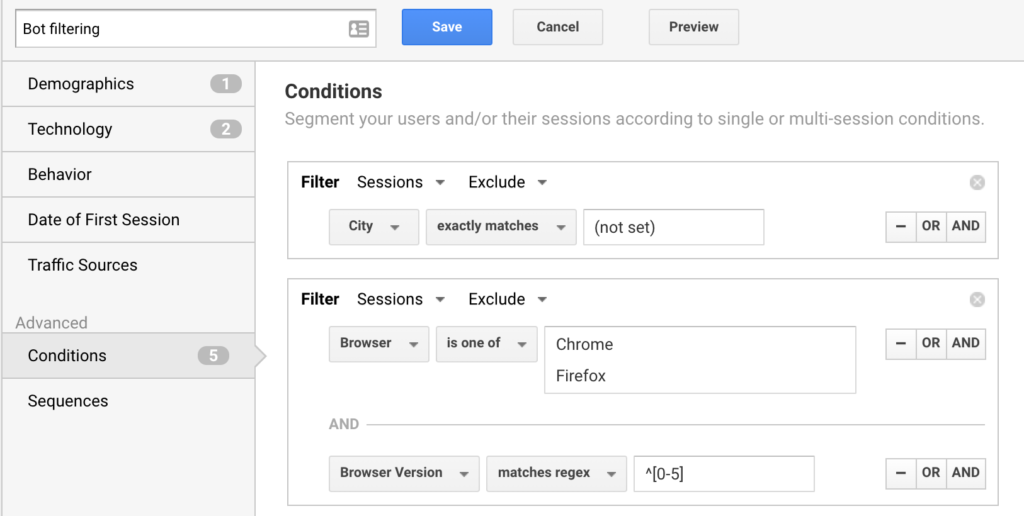

Select Sessions and Exclude, then City “exactly matches” and enter the value (not set).

Next, select Sessions and Exclude again, then Browser “is one of” and enter Chrome, a line break, then Firefox. As you start typing, GA should offer to autocomplete these values. Now add an “AND” operator. Select Browser Version “matches regex” and enter the value ^[0-5]. Because this condition is set to Exclude, it removes Chrome and Firefox sessions whose version begins with 0 through 5.
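An Exclude condition removes the sessions it matches, so the version check only drops old browsers when it is a regex match. The Python sketch below tests the `^[0-5]` pattern against a few sample Chrome version strings, next to a stricter variant (`STRICT_PATTERN`, a hypothetical alternative that is not part of the original filter). Python’s `re` stands in for GA’s regex engine here, which is an approximation.

```python
import re

ARTICLE_PATTERN = re.compile(r"^[0-5]")       # pattern used in the segment above
STRICT_PATTERN = re.compile(r"^[0-5](\.|$)")  # hypothetical: major version 0-5 only


def is_excluded(browser: str, version: str, pattern: re.Pattern) -> bool:
    """Exclude when Browser is Chrome/Firefox AND the version matches the pattern."""
    return browser in ("Chrome", "Firefox") and pattern.match(version) is not None


if __name__ == "__main__":
    for version in ["4.0.249.0", "49.0", "80.0.3987.149", "120.0.6099.71"]:
        print(
            version,
            is_excluded("Chrome", version, ARTICLE_PATTERN),
            is_excluded("Chrome", version, STRICT_PATTERN),
        )
```

With `^[0-5]`, Chrome 49 and Chrome 120 are excluded along with Chrome 4, because the pattern only checks the first character of the version string. The stricter variant excludes only major versions 0 through 5, so it is worth testing any pattern against the version strings in your own reports before relying on it.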

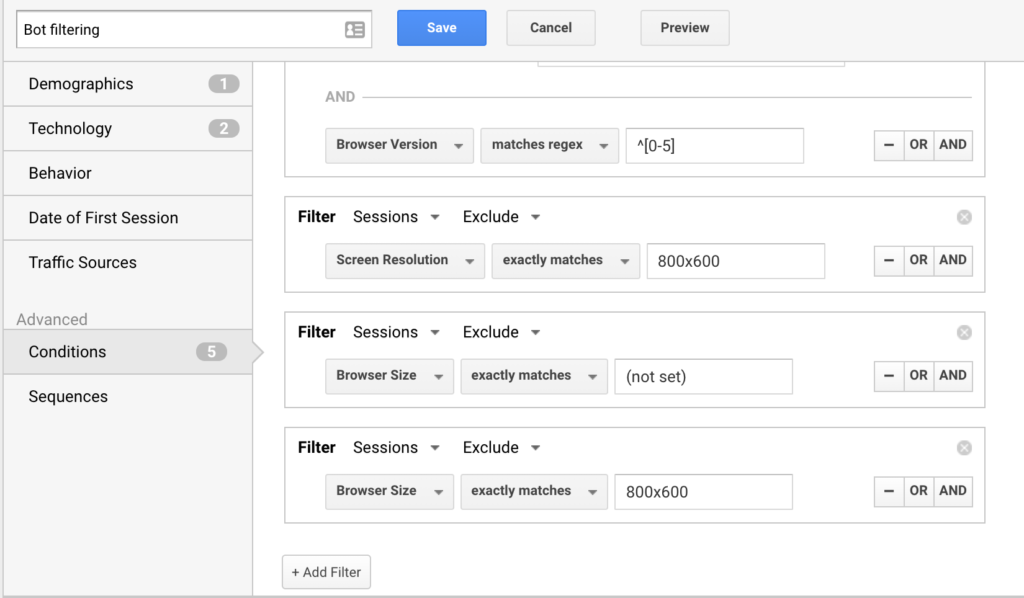

We’ll add three more filters here:

Sessions Exclude Screen Resolution “exactly matches” 800x600.

Sessions Exclude Browser Size “exactly matches” (not set).

Sessions Exclude Browser Size “exactly matches” 800x600.

Click Save when you’ve entered everything.

If you only came here for the basic filter, that’s it: you’re done. It’s that easy.
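If it helps to see the finished segment in one place, here is a hedged Python sketch that writes the conditions from the steps above as data and evaluates them against session records. The field names, the dict-based session format, and helpers such as `in_segment` and `retained_share` are all hypothetical; the sketch only models the segment’s logic and does not talk to Google Analytics. It uses the lowercase `800x600` form and treats the browser version check as a regex match inside an exclude group.

```python
import re
from typing import Union

Session = dict[str, str]
Value = Union[str, list[str]]

# The segment's conditions as data (hypothetical field names). Entries inside a
# group are ANDed; a session matching any whole group is excluded. The "does not
# exactly match" includes from the Demographics/Technology tabs are written here
# as the equivalent excludes.
EXCLUDE_GROUPS: list[list[tuple[str, str, Value]]] = [
    [("language", "exactly matches", "(not set)")],
    [("operating_system", "exactly matches", "Linux")],
    [("screen_resolution", "exactly matches", "(not set)")],
    [("city", "exactly matches", "(not set)")],
    [("browser", "is one of", ["Chrome", "Firefox"]),
     ("browser_version", "matches regex", r"^[0-5]")],
    [("screen_resolution", "exactly matches", "800x600")],
    [("browser_size", "exactly matches", "(not set)")],
    [("browser_size", "exactly matches", "800x600")],
]


def condition_holds(session: Session, field: str, operator: str, value: Value) -> bool:
    """Evaluate one condition; missing fields are treated as (not set)."""
    actual = session.get(field, "(not set)")
    if operator == "exactly matches":
        return actual == value
    if operator == "is one of":
        return actual in value
    if operator == "matches regex":
        return re.search(str(value), actual) is not None
    raise ValueError(f"unsupported operator: {operator}")


def in_segment(session: Session) -> bool:
    """True if the session survives every exclude group."""
    return not any(
        all(condition_holds(session, *condition) for condition in group)
        for group in EXCLUDE_GROUPS
    )


def retained_share(sessions: list[Session]) -> float:
    """Percentage of sessions that remain in the segment."""
    if not sessions:
        return 0.0
    return 100 * sum(in_segment(s) for s in sessions) / len(sessions)


if __name__ == "__main__":
    human = {"language": "en-us", "operating_system": "Windows",
             "screen_resolution": "1920x1080", "city": "Seattle",
             "browser": "Chrome", "browser_version": "80.0.3987.149",
             "browser_size": "1280x720"}
    bot = dict(human, city="(not set)")
    print(retained_share([human, bot]))  # 50.0
```

`retained_share` computes the same kind of figure discussed in the validation section: the percentage of traffic that still meets the criteria once the segment is applied.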

How to configure the relevancy filter

If you want to add location-based relevancy filtering to your Google Analytics bot filtering segment, return to the Demographics tab. Under “Location” you can build a variety of regional filters; see “Refining your filter” above for suggestions and more details.

Validating and troubleshooting your filter

If you’ve created the filter in Google Analytics, you’ve probably noticed the shift in your numbers when it’s applied, and it may be significant. In the case of our example site, only 46.87% of users met the criteria. That’s a huge decrease, but it also shows how insidious fake traffic is and how important Google Analytics bot filtering is for your data integrity.

Shifts to expect in your data

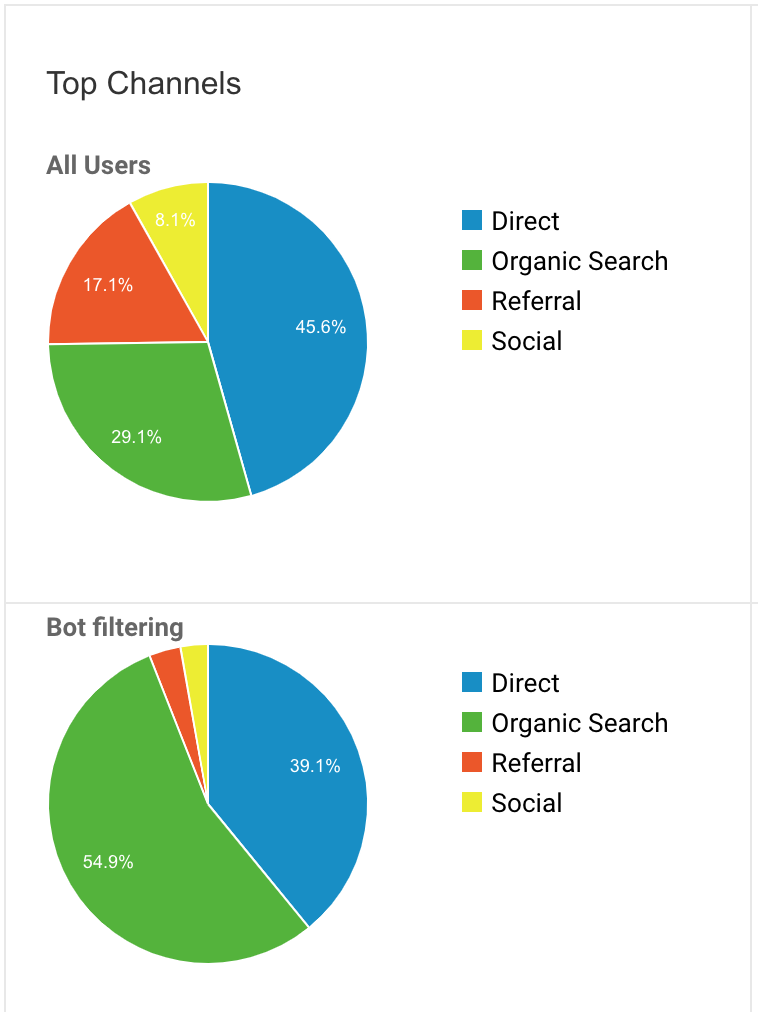

With this segment applied alongside All Users, you can quickly see the type of traffic that disappears and how other trends shift. You’ll likely see a significant change in your Acquisition > Overview breakdown.

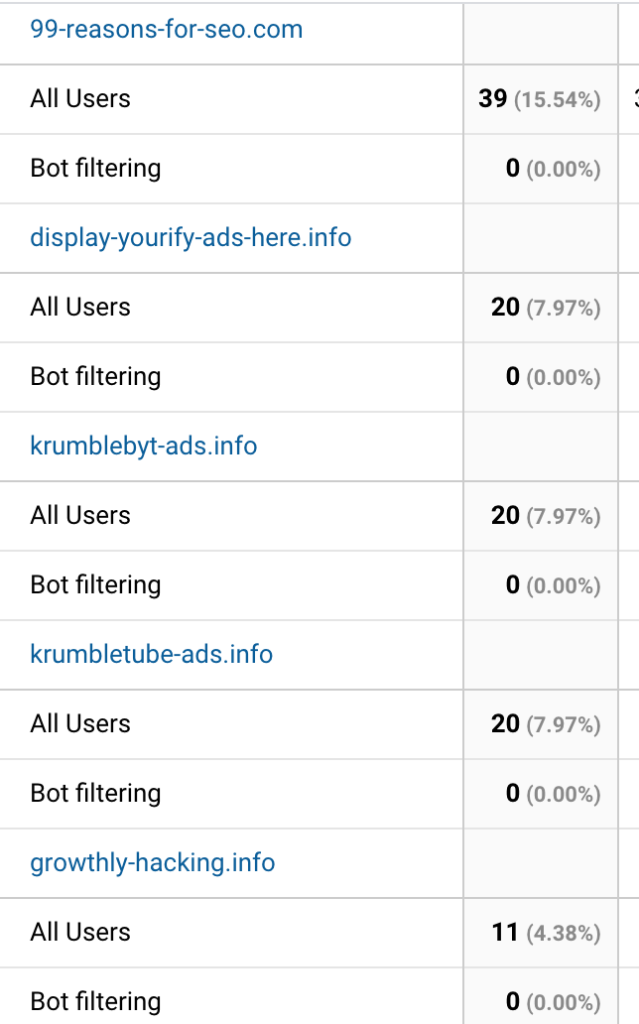

This site is an extreme example, as noted before (more than 50% of its traffic was stripped away), but you can observe similar patterns on other sites. You’ll likely see a shift toward organic traffic and away from direct, referral, and social traffic. Referral traffic is an especially common source of bots due to what’s known as “referral spam” or “referrer spam”.
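To quantify this shift rather than eyeballing it, you could compare channel shares between All Users and the filtered segment. This Python sketch assumes you have exported both sets of sessions with a hypothetical `channel` field; it reports the percentage-point change per channel. The sample data is made up for illustration.

```python
from collections import Counter

Session = dict[str, str]


def channel_shares(sessions: list[Session]) -> dict[str, float]:
    """Percentage of sessions per channel (e.g. Organic Search, Direct, Referral)."""
    counts = Counter(s.get("channel", "(Other)") for s in sessions)
    total = sum(counts.values())
    return {channel: 100 * n / total for channel, n in counts.items()} if total else {}


def share_shift(all_users: list[Session], segment: list[Session]) -> dict[str, float]:
    """Percentage-point change per channel from All Users to the filtered segment."""
    before, after = channel_shares(all_users), channel_shares(segment)
    return {
        ch: after.get(ch, 0.0) - before.get(ch, 0.0)
        for ch in sorted(before.keys() | after.keys())
    }


if __name__ == "__main__":
    # Toy data for illustration only, not figures from the example site.
    all_users = [{"channel": c} for c in ["Organic Search", "Organic Search", "Direct", "Referral"]]
    segment = [{"channel": "Organic Search"}, {"channel": "Organic Search"}]
    print(share_shift(all_users, segment))
    # {'Direct': -25.0, 'Organic Search': 50.0, 'Referral': -25.0}
```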

Common filtering problems

I’ve got zero users left!

Don’t worry! Your traffic likely isn’t 100% bots. Double and triple check the configuration of your filter. Even a single typo can make a huge difference.

I’m still seeing referral spam

Ah, referral spam. Referral spam is a super common issue for data integrity in Google Analytics.

It can be almost impossible to filter out every last bit of referral spam. However, referral spam can actually be valuable because it reveals the profile of this traffic. If you’re still seeing referral spam, use it as a base to tailor the filter to your site’s specific traffic concerns. Use the “Secondary dimension” drop-down to investigate device types, operating system versions, and other attributes that may reveal a pattern of bad traffic.
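The “Secondary dimension” investigation boils down to a group-and-count. As a hedged Python sketch, assuming exported sessions with hypothetical `channel` and dimension fields, this counts referral sessions by whichever dimension you choose; a value that dominates the referral traffic is a candidate for a new filter condition.

```python
from collections import Counter

Session = dict[str, str]


def profile_referrals(sessions: list[Session], dimension: str, top: int = 5) -> list[tuple[str, int]]:
    """Count referral sessions by one secondary dimension, most common first."""
    referrals = (s for s in sessions if s.get("channel") == "Referral")
    return Counter(s.get(dimension, "(not set)") for s in referrals).most_common(top)


if __name__ == "__main__":
    # Toy data for illustration only.
    sessions = [
        {"channel": "Referral", "operating_system_version": "10"},
        {"channel": "Referral", "operating_system_version": "10"},
        {"channel": "Referral", "operating_system_version": "7"},
        {"channel": "Organic Search", "operating_system_version": "10"},
    ]
    print(profile_referrals(sessions, "operating_system_version"))  # [('10', 2), ('7', 1)]
```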

I’m seeing legitimate conversions filtered out

No filter is truly one size fits all, so it’s no surprise that some conversions might get caught by this one. As with referral spam, this is an opportunity to refine the filter based on your own data. Check which criteria in the filter are flagging the converting traffic and consider whether it’s worth removing them. You may also want to double-check the configuration of your conversion goals: some, such as pageview-based goals, are easier for bot traffic to emulate than others.
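Finding out which criteria are catching converting traffic is a per-rule tally. This Python sketch assumes you can export converting sessions as dicts with hypothetical field names, and for brevity it includes only a subset of the filter’s rules; it counts how many conversions each rule would exclude, so the rule with the highest count is the first one to re-examine.

```python
from collections import Counter
from typing import Callable

Session = dict[str, str]

# A hypothetical subset of the filter's rules: name -> "would this rule exclude the session?"
RULES: dict[str, Callable[[Session], bool]] = {
    "Language (not set)": lambda s: s.get("language") == "(not set)",
    "City (not set)": lambda s: s.get("city") == "(not set)",
    "OS is Linux": lambda s: s.get("operating_system") == "Linux",
    "Screen 800x600": lambda s: s.get("screen_resolution") == "800x600",
}


def rules_hit(session: Session) -> list[str]:
    """Names of every rule that would exclude this session."""
    return [name for name, rule in RULES.items() if rule(session)]


def blame_report(converting_sessions: list[Session]) -> Counter:
    """How many converting sessions each rule would exclude."""
    return Counter(name for s in converting_sessions for name in rules_hit(s))


if __name__ == "__main__":
    # Toy data for illustration only.
    conversions = [
        {"operating_system": "Linux", "city": "Portland"},
        {"operating_system": "Linux", "city": "(not set)"},
        {"operating_system": "Windows", "city": "Seattle"},
    ]
    print(blame_report(conversions).most_common())  # [('OS is Linux', 2), ('City (not set)', 1)]
```

If one rule accounts for most of the blocked conversions (the Linux rule on a Linux-focused blog, for example), that is the condition to reconsider.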

Wrapping up

When it comes to Google Analytics bot filtering, there’s no 100% effective, one-size-fits-all solution. Realistically, if your site has a lot of issues with fraudulent traffic, you should work toward filtering it out at a higher level so that it never reaches your Google Analytics account. Caching and CDN services such as Cloudflare offer suites of solutions for managing bots.

If you’re curious to learn more about bots and automated traffic, and why not all bots are bad, I recommend Cloudflare’s article on “bot management”. Keep in mind that managing bots themselves (and how they crawl your site) is different from the methods used here to clean your Google Analytics data.

Thanks for reading, and I hope you found this helpful. Let me know if you have any questions in the comments.

Excluding 800×600 resolution does not remove this traffic from GA. I have also tried putting it in regex format, ^800×600$, etc., but it doesn’t seem to work.

Hey J, you’ll need to go into Conditions > + Add Filter > Users + Exclude + Screen Resolution + exactly matches + 800x600. That should remove all traffic from 800x600 resolution users.